News Feed

Semua Kabar

The case for embedding audit trails in AI systems before scaling

Join the event trusted by enterprise leaders for nearly two decades. VB Transform brings together the people building real enterprise AI strategy. Learn more

Editor’s note: Emilia will lead an editorial roundtable on this topic at VB Transform this month. Register today.

Orchestration frameworks for AI services serve multiple functions for enterprises. They not only set out how applications or agents flow together, but they should also let administrators manage workflows and agents and audit their systems.

As enterprises begin to scale their AI services and put them into production, building a manageable, traceable, auditable and robust pipeline ensures their agents run exactly as they’re supposed to. Without these controls, organizations may not be aware of what is happening in their AI systems and may only discover an issue too late, when something goes wrong or they fail to comply with regulations.

Kevin Kiley, president of enterprise orchestration company Airia, told VentureBeat in an interview that frameworks must include auditability and traceability.

“It’s critical to have that observability and be able to go back to the audit log and show what information was provided at what point again,” Kiley said. “You have to know if it was a bad actor, or an internal employee who wasn’t aware they were sharing information or if it was a hallucination. You need a record of that.”
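A record of the kind Kiley describes could be sketched as an append-only log in which each entry stores who did what and when, plus a hash of the previous entry so later tampering is detectable. This is a minimal illustration, not any vendor's implementation; the schema, the function names `append_audit_record` and `verify_chain`, and the sample actors are all assumptions.

```python
import hashlib
import json
from datetime import datetime, timezone


def append_audit_record(log, actor, action, payload):
    """Append a hash-chained record to an in-memory audit log (hypothetical schema)."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "action": action,
        "payload": payload,
        "prev_hash": prev_hash,
    }
    # Hash the record contents (without the hash field itself).
    body = json.dumps(record, sort_keys=True).encode("utf-8")
    record["hash"] = hashlib.sha256(body).hexdigest()
    log.append(record)
    return record


def verify_chain(log):
    """Return True if no record was altered or reordered after being written."""
    prev_hash = "0" * 64
    for record in log:
        body = {key: value for key, value in record.items() if key != "hash"}
        digest = hashlib.sha256(
            json.dumps(body, sort_keys=True).encode("utf-8")
        ).hexdigest()
        if record["prev_hash"] != prev_hash or record["hash"] != digest:
            return False
        prev_hash = record["hash"]
    return True


# Hypothetical events: an agent retrieving a document, then a user prompt.
audit_log = []
append_audit_record(audit_log, "agent:support-bot", "retrieve", {"doc_id": "kb-123"})
append_audit_record(audit_log, "user:jdoe", "prompt", {"text": "Summarize the contract"})
```

In a production system the log would live in durable, access-controlled storage rather than a Python list; the hash chain only shows how "what information was provided at what point" can be made verifiable after the fact.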

Ideally, robustness and audit trails should be built into AI systems at a very early stage. Understanding the potential risks of a new AI application or agent and ensuring they continue to perform to standards before deployment would help ease concerns around putting AI into production.

However, organizations did not initially design their systems with traceability and auditability in mind. Many AI pilot programs began life as experiments, started without an orchestration layer or an audit trail.

The big question enterprises now face is how to manage all the agents and applications, ensure their pipelines remain robust, monitor AI performance and, if something goes wrong, know what went wrong.

Before building any AI application, however, experts said organizations need to take stock of their data. If a company knows which data it is okay with AI systems accessing and which data it used to fine-tune a model, it has a baseline against which to compare long-term performance.

“When you run some of those AI systems, it’s more about, what kind of data can I validate that my system’s actually running properly or not?” Yrieix Garnier, vice president of products at DataDog, told VentureBeat in an interview. “That’s very hard to actually do, to understand that I have the right system of reference to validate AI solutions.”

Once the organization identifies and locates its data, it needs to establish dataset versioning (essentially assigning a timestamp or version number) to make experiments reproducible and to understand what has changed in the model. These datasets and models, any applications that use these specific models or agents, authorized users and the baseline runtime numbers can be loaded into either the orchestration or observability platform.
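As a rough illustration of dataset versioning, the sketch below stamps a dataset with a label, a timestamp and a content hash, so two snapshots can be compared later. The function name `dataset_version`, the record fields and the sample rows are assumptions made for the example, not part of any platform named in the article.

```python
import hashlib
from datetime import datetime, timezone


def dataset_version(rows, label):
    """Build a version record for a dataset given as a list of text rows."""
    digest = hashlib.sha256()
    for row in rows:
        digest.update(row.encode("utf-8"))
        digest.update(b"\n")  # row separator so row boundaries affect the hash
    return {
        "label": label,
        "content_hash": digest.hexdigest(),
        "row_count": len(rows),
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


# Two hypothetical snapshots that differ in a single value.
v1 = dataset_version(["a,1", "b,2"], "v1")
v2 = dataset_version(["a,1", "b,3"], "v2")

# A differing hash signals that the data behind a model or experiment changed.
changed = v1["content_hash"] != v2["content_hash"]
```

The timestamp answers "when was this snapshot taken," while the hash answers "is this the same data," which is what makes an experiment reproducible against a known baseline.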

Just like when choosing foundation models to build with, orchestration teams need to consider transparency and openness. While some closed-source orchestration systems have numerous advantages, more open-source platforms could also offer benefits that some enterprises value, such as increased visibility into decision-making systems.

Open-source platforms like MLFlow, LangChain and Grafana provide agents and models with granular and flexible instructions and monitoring. Enterprises can choose to develop their AI pipeline through a single, end-to-end platform, such as DataDog, or utilize various interconnected tools from AWS.

Another consideration for enterprises is to plug in a system that maps agents and application responses to compliance tools or responsible AI policies. AWS and Microsoft both offer services that track AI tools and how closely they adhere to guardrails and other policies set by the user.

Kiley said one consideration for enterprises when building these reliable pipelines revolves around choosing a more transparent system. For Kiley, not having any visibility into how AI systems work won’t work.

“Regardless of what the use case or even the industry is, you’re going to have those situations where you have to have flexibility, and a closed system is not going to work. There are providers out there that’ve great tools, but it’s sort of a black box. I don’t know how it’s arriving at these decisions. I don’t have the ability to intercept or interject at points where I might want to,” he said.

I’ll be leading an editorial roundtable at VB Transform 2025 in San Francisco, June 24-25, called “Best practices to build orchestration frameworks for agentic AI,” and I’d love to have you join the conversation. Register today.

If you want to impress your boss, VB Daily has you covered. We give you the inside scoop on what companies are doing with generative AI, from regulatory shifts to practical deployments, so you can share insights for maximum ROI.


Beyond GPT architecture: Why Google’s Diffusion approach could reshape LLM deployment


Last month, along with a comprehensive suite of new AI tools and innovations, Google DeepMind unveiled Gemini Diffusion. This experimental research model uses a diffusion-based approach to generate text. Traditionally, large language models (LLMs) like GPT and Gemini itself have relied on autoregression, a step-by-step approach where each word is generated based on the previous one. Diffusion language models (DLMs), also known as diffusion-based large language models (dLLMs), leverage a method more commonly seen in image generation, starting with random noise and gradually refining it into a coherent output. This approach dramatically increases generation speed and can improve coherency and consistency.

Gemini Diffusion is currently available as an experimental demo; sign up for the waitlist here to get access.

(Editor’s note: We’ll be unpacking paradigm shifts like diffusion-based language models, and what it takes to run them in production, at VB Transform, June 24–25 in San Francisco, alongside Google DeepMind, LinkedIn and other enterprise AI leaders.)

Diffusion and autoregression are fundamentally different approaches. The autoregressive approach generates text sequentially, with tokens predicted one at a time. While this method ensures strong coherence and context tracking, it can be computationally intensive and slow, especially for long-form content.

Diffusion models, by contrast, begin with random noise, which is gradually denoised into a coherent output. When applied to language, the technique has several advantages. Blocks of text can be processed in parallel, potentially producing entire segments or sentences at a much higher rate.

Gemini Diffusion can reportedly generate 1,000-2,000 tokens per second. In contrast, Gemini 2.5 Flash has an average output speed of 272.4 tokens per second. Additionally, mistakes in generation can be corrected during the refining process, improving accuracy and reducing the number of hallucinations. There may be trade-offs in terms of fine-grained accuracy and token-level control; however, the increase in speed will be a game-changer for numerous applications.
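To put those throughput figures in perspective, the back-of-the-envelope sketch below converts tokens per second into wall-clock time for a single response. The 2,000-token response length is an assumption chosen for illustration, and the calculation ignores time-to-first-token and network overhead.

```python
def seconds_to_generate(num_tokens, tokens_per_second):
    """Time to emit num_tokens at a steady output rate, ignoring startup latency."""
    return num_tokens / tokens_per_second


RESPONSE_TOKENS = 2000  # assumed response length, for illustration only

# Rates quoted in the article.
flash_seconds = seconds_to_generate(RESPONSE_TOKENS, 272.4)          # Gemini 2.5 Flash average
diffusion_low_seconds = seconds_to_generate(RESPONSE_TOKENS, 1000)   # low end of reported range
diffusion_high_seconds = seconds_to_generate(RESPONSE_TOKENS, 2000)  # high end of reported range

print(f"Gemini 2.5 Flash: {flash_seconds:.1f} s")
print(f"Gemini Diffusion: {diffusion_high_seconds:.1f} to {diffusion_low_seconds:.1f} s")
```

Under these assumptions the autoregressive model needs a little over seven seconds, while the diffusion model needs between one and two.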

During training, DLMs work by gradually corrupting a sentence with noise over many steps, until the original sentence is rendered entirely unrecognizable. The model is then trained to reverse this process, step by step, reconstructing the original sentence from increasingly noisy versions. Through the iterative refinement, it learns to model the entire distribution of plausible sentences in the training data.

While the specifics of Gemini Diffusion have not yet been disclosed, the typical training methodology for a diffusion model involves these key stages:

Forward diffusion: With each sample in the training dataset, noise is added progressively over multiple cycles (often 500 to 1,000) until it becomes indistinguishable from random noise.

Reverse diffusion: The model learns to reverse each step of the noising process, essentially learning how to “denoise” a corrupted sentence one stage at a time, eventually restoring the original structure.

This process is repeated millions of times with diverse samples and noise levels, enabling the model to learn a reliable denoising function.
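The forward stage described above can be illustrated with a toy example. Because the specifics of Gemini Diffusion are undisclosed, the sketch assumes one possible form of noise for discrete text: progressively replacing tokens with a mask symbol. The `[MASK]` token, the linear schedule and the function name `forward_diffusion` are all assumptions, and only three steps are used instead of hundreds.

```python
import random

MASK = "[MASK]"  # hypothetical mask token standing in for "noise"


def forward_diffusion(tokens, num_steps, seed=0):
    """Return the sequence after each noising step; the last step is fully masked."""
    rng = random.Random(seed)
    order = list(range(len(tokens)))
    rng.shuffle(order)  # random order in which positions get corrupted
    current = list(tokens)
    trajectory = [list(current)]  # step 0 is the clean sentence
    for step in range(1, num_steps + 1):
        # Number of positions that should be masked after this step (linear schedule).
        target = round(len(tokens) * step / num_steps)
        for position in order[:target]:
            current[position] = MASK
        trajectory.append(list(current))
    return trajectory


sentence = "the cat sat on the mat".split()
trajectory = forward_diffusion(sentence, num_steps=3)

# Each adjacent pair (noisier, cleaner) is a training example for the reverse
# stage: the model sees trajectory[t] and is asked to recover trajectory[t - 1].
for step, noisy in enumerate(trajectory):
    print(step, " ".join(noisy))
```

A trained model would run this trajectory backwards at generation time, starting from a fully masked sequence and filling in tokens over several refinement passes.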

Once trained, the model is capable of generating entirely new sentences. DLMs generally require a condition or input, such as a prompt, class label, or embedding, to guide the generation towards desired outcomes. The condition is injected into each step of the denoising process, which shapes an initial blob of noise into structured and coherent text.

In an interview with VentureBeat, Brendan O’Donoghue, research scientist at Google DeepMind and one of the leads on the Gemini Diffusion project, elaborated on some of the advantages of diffusion-based techniques when compared to autoregression. According to O’Donoghue, the major advantages of diffusion techniques are the following:

O’Donoghue also noted the main disadvantages: “higher cost of serving and slightly higher time-to-first-token (TTFT), since autoregressive models will produce the first token right away. For diffusion, the first token can only appear when the entire sequence of tokens is ready.”

Google says Gemini Diffusion’s performance is comparable to Gemini 2.0 Flash-Lite.

* Non-agentic evaluation (single turn edit only), max prompt length of 32K.

The two models were compared using several benchmarks, with scores based on how many times the model produced the correct answer on the first try. Gemini Diffusion performed well in coding and mathematics tests, while Gemini 2.0 Flash-Lite had the edge on reasoning, scientific knowledge, and multilingual capabilities.

As Gemini Diffusion evolves, there’s no reason to think that its performance won’t catch up with more established models. According to O’Donoghue, the gap between the two techniques is “essentially closed in terms of benchmark performance, at least at the relatively small sizes we have scaled up to. In fact, there may be some performance advantage for diffusion in some domains where non-local consistency is important, for example, coding and reasoning.”

VentureBeat was granted access to the experimental demo. When putting Gemini Diffusion through its paces, the first thing we noticed was the speed. When running the suggested prompts provided by Google, including building interactive HTML apps like Xylophone and Planet Tac Toe, each request completed in under three seconds, with speeds ranging from 600 to 1,300 tokens per second.

To test its performance with a real-world application, we asked Gemini Diffusion to build a video chat interface with the following prompt:

In less than two seconds, Gemini Diffusion created a working interface with a video preview and an audio meter.

Though this was not a complex implementation, it could be the start of an MVP that can be completed with a bit of further prompting. Note that Gemini 2.5 Flash also produced a working interface, albeit at a slightly slower pace (approximately seven seconds).

Gemini Diffusion also features “Instant Edit,” a mode where text or code can be pasted in and edited in real-time with minimal prompting. Instant Edit is effective for many types of text editing, including correcting grammar, updating text to target different reader personas, or adding SEO keywords. It is also useful for tasks such as refactoring code, adding new features to applications, or converting an existing codebase to a different language.

It’s safe to say that any application that requires a quick response time stands to benefit from DLM technology. This includes real-time and low-latency applications, such as conversational AI and chatbots, live transcription and translation, or IDE autocomplete and coding assistants.

According to O’Donoghue, with applications that leverage “inline editing, for example, taking a piece of text and making some changes in-place, diffusion models are applicable in ways autoregressive models aren’t.” DLMs also have an advantage with reasoning, math, and coding problems, due to “the non-causal reasoning afforded by the bidirectional attention.”

DLMs are still in their infancy; however, the technology can potentially transform how language models are built. Not only do they generate text at a much higher rate than autoregressive models, but their ability to go back and fix mistakes means that, eventually, they may also produce results with greater accuracy.

Gemini Diffusion enters a growing ecosystem of DLMs, with two notable examples being Mercury, developed by Inception Labs, and LLaDa, an open-source model from GSAI. Together, these models reflect the broader momentum behind diffusion-based language generation and offer a scalable, parallelizable alternative to traditional autoregressive architectures.


Do reasoning models really “think” or not? Apple research sparks lively debate, response


Apple’s machine-learning group set off a rhetorical firestorm earlier this month with its release of “The Illusion of Thinking,” a 53-page research paper arguing that so-called large reasoning models (LRMs) or reasoning large language models (reasoning LLMs) such as OpenAI’s “o” series and Google’s Gemini-2.5 Pro and Flash Thinking don’t actually engage in independent “thinking” or “reasoning” from generalized first principles learned from their training data.

Instead, the authors contend, these reasoning LLMs are actually performing a kind of “pattern matching” and their apparent reasoning ability seems to fall apart once a task becomes too complex, suggesting that their architecture and performance is not a viable path to improving generative AI to the point that it is artificial generalized intelligence (AGI), which OpenAI defines as a model that outperforms humans at most economically valuable work, or superintelligence, AI even smarter than human beings can comprehend.

Unsurprisingly, the paper immediately circulated widely among the machine learning community on X and many readers’ initial reactions were to declare that Apple had effectively disproven much of the hype around this class of AI: “Apple just proved AI ‘reasoning’ models like Claude, DeepSeek-R1, and o3-mini don’t actually reason at all,” declared Ruben Hassid, creator of EasyGen, an LLM-driven LinkedIn post auto writing tool. “They just memorize patterns really well.”

But today, a new paper has emerged, the cheekily titled “The Illusion of The Illusion of Thinking,” importantly co-authored by a reasoning LLM itself, Claude Opus 4, and Alex Lawsen, a human being and independent AI researcher and technical writer. It includes many criticisms from the larger ML community about the Apple paper and effectively argues that the methodologies and experimental designs the Apple Research team used in their initial work are fundamentally flawed.

While we here at VentureBeat are not ML researchers ourselves and not prepared to say the Apple Researchers are wrong, the debate has certainly been a lively one and the issue about the capabilities of LRMs or reasoner LLMs compared to human thinking seems far from settled.

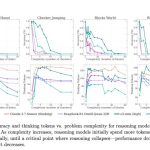

Using four classic planning problems — Tower of Hanoi, Blocks World, River Crossing and Checkers Jumping — Apple’s researchers designed a battery of tasks that forced reasoning models to plan multiple moves ahead and generate complete solutions.

These games were chosen for their long history in cognitive science and AI research and their ability to scale in complexity as more steps or constraints are added. Each puzzle required the models to not just produce a correct final answer, but to explain their thinking along the way using chain-of-thought prompting.

As the puzzles increased in difficulty, the researchers observed a consistent drop in accuracy across multiple leading reasoning models. In the most complex tasks, performance plunged to zero. Notably, the length of the models’ internal reasoning traces—measured by the number of tokens spent thinking through the problem—also began to shrink. Apple’s researchers interpreted this as a sign that the models were abandoning problem-solving altogether once the tasks became too hard, essentially “giving up.”

The timing of the paper’s release, just ahead of Apple’s annual Worldwide Developers Conference (WWDC), added to the impact. It quickly went viral across X, where many interpreted the findings as a high-profile admission that current-generation LLMs are still glorified autocomplete engines, not general-purpose thinkers. This framing, while controversial, drove much of the initial discussion and debate that followed.

Among the most vocal critics of the Apple paper was ML researcher and X user @scaling01 (aka “Lisan al Gaib”), who posted multiple threads dissecting the methodology.

In one widely shared post, Lisan argued that the Apple team conflated token budget failures with reasoning failures, noting that “all models will have 0 accuracy with more than 13 disks simply because they cannot output that much!”

For puzzles like Tower of Hanoi, he emphasized, the output size grows exponentially, while the LLM context windows remain fixed, writing “just because Tower of Hanoi requires exponentially more steps than the other ones, that only require quadratically or linearly more steps, doesn’t mean Tower of Hanoi is more difficult” and convincingly showed that models like Claude 3 Sonnet and DeepSeek-R1 often produced algorithmically correct strategies in plain text or code—yet were still marked wrong.

Another post highlighted that even breaking the task down into smaller, decomposed steps worsened model performance, not because the models failed to understand, but because they lacked memory of previous moves and strategy.

“The LLM needs the history and a grand strategy,” he wrote, suggesting the real problem was context-window size rather than reasoning.

I raised another important grain of salt myself on X: Apple never benchmarked the model performance against human performance on the same tasks. “Am I missing it, or did you not compare LRMs to human perf[ormance] on [the] same tasks?? If not, how do you know this same drop-off in perf doesn’t happen to people, too?” I asked the researchers directly in a thread tagging the paper’s authors. I also emailed them about this and many other questions, but they have yet to respond.

Others echoed that sentiment, noting that human problem solvers also falter on long, multistep logic puzzles, especially without pen-and-paper tools or memory aids. Without that baseline, Apple’s claim of a fundamental “reasoning collapse” feels ungrounded.

Several researchers also questioned the binary framing of the paper’s title and thesis—drawing a hard line between “pattern matching” and “reasoning.”

Alexander Doria, aka Pierre-Carl Langlais, an LLM trainer at energy-efficient French AI startup Pleias, said the framing misses the nuance, arguing that models might be learning partial heuristics rather than simply matching patterns.

“Ok I guess I have to go through that Apple paper. My main issue is the framing which is super binary: ‘Are these models capable of generalizable reasoning, or are they leveraging different forms of pattern matching?’ Or what if they only caught genuine yet partial heuristics,” Doria wrote on X.

Ethan Mollick, the AI focused professor at University of Pennsylvania’s Wharton School of Business, called the idea that LLMs are “hitting a wall” premature, likening it to similar claims about “model collapse” that didn’t pan out.

Meanwhile, critics like @arithmoquine were more cynical, suggesting that Apple, behind the curve on LLMs compared to rivals like OpenAI and Google, might be trying to lower expectations by coming up with research on “how it’s all fake and gay and doesn’t matter anyway,” they quipped, pointing out Apple’s reputation for now poorly performing AI products like Siri.

In short, while Apple’s study triggered a meaningful conversation about evaluation rigor, it also exposed a deep rift over how much trust to place in metrics when the test itself might be flawed.

In other words, the models may have understood the puzzles but ran out of “paper” to write the full solution.

“Token limits, not logic, froze the models,” wrote Carnegie Mellon researcher Rohan Paul in a widely shared thread summarizing the follow-up tests.

Yet not everyone is ready to clear LRMs of the charge. Some observers point out that Apple’s study still revealed three performance regimes — simple tasks where added reasoning hurts, mid-range puzzles where it helps, and high-complexity cases where both standard and “thinking” models crater.

Others view the debate as corporate positioning, noting that Apple’s own on-device “Apple Intelligence” models trail rivals on many public leaderboards.

In response to Apple’s claims, a new paper titled “The Illusion of the Illusion of Thinking” was released on arXiv by independent researcher and technical writer Alex Lawsen of the nonprofit Open Philanthropy, in collaboration with Anthropic’s Claude Opus 4.

The paper directly challenges the original study’s conclusion that LLMs fail due to an inherent inability to reason at scale. Instead, the rebuttal presents evidence that the observed performance collapse was largely a by-product of the test setup—not a true limit of reasoning capability.

Lawsen and Claude demonstrate that many of the failures in the Apple study stem from token limitations. For example, in tasks like Tower of Hanoi, the models must print exponentially many steps — over 32,000 moves for just 15 disks — leading them to hit output ceilings.
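The figure quoted above follows from the standard result that the shortest Tower of Hanoi solution for n disks takes 2^n − 1 moves. The short sketch below computes that count and a rough output size; the tokens-per-move value is purely an assumption for illustration and is not taken from either paper.

```python
def hanoi_moves(num_disks):
    """Minimum number of moves needed to solve Tower of Hanoi with num_disks disks."""
    return 2 ** num_disks - 1


TOKENS_PER_MOVE = 10  # assumed cost of printing one move; illustrative only


def estimated_output_tokens(num_disks, tokens_per_move=TOKENS_PER_MOVE):
    """Rough size of a fully enumerated solution under the assumed per-move cost."""
    return hanoi_moves(num_disks) * tokens_per_move


for disks in (10, 13, 15):
    print(disks, hanoi_moves(disks), estimated_output_tokens(disks))
```

Each added disk doubles the length of the answer, so a fixed output limit is reached quickly regardless of whether the model knows the strategy.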

The rebuttal points out that Apple’s evaluation script penalized these token-overflow outputs as incorrect, even when the models followed a correct solution strategy internally.

The authors also highlight several questionable task constructions in the Apple benchmarks. Some of the River Crossing puzzles, they note, are mathematically unsolvable as posed, and yet model outputs for these cases were still scored. This further calls into question the conclusion that accuracy failures represent cognitive limits rather than structural flaws in the experiments.

To test their theory, Lawsen and Claude ran new experiments allowing models to give compressed, programmatic answers. When asked to output a Lua function that could generate the Tower of Hanoi solution—rather than writing every step line-by-line—models suddenly succeeded on far more complex problems. This shift in format eliminated the collapse entirely, suggesting that the models didn’t fail to reason. They simply failed to conform to an artificial and overly strict rubric.
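To show what a compressed, programmatic answer looks like, here is a minimal sketch of the classic recursive solution together with a checker that replays the moves. The rebuttal asked models for a Lua function; this version uses Python instead, and the peg names and the function names `hanoi` and `is_valid_solution` are assumptions for the example.

```python
def hanoi(num_disks, source="A", target="C", spare="B"):
    """Yield (disk, from_peg, to_peg) moves that solve Tower of Hanoi."""
    if num_disks == 0:
        return
    # Move the smaller stack out of the way, move the largest disk, then restack.
    yield from hanoi(num_disks - 1, source, spare, target)
    yield (num_disks, source, target)
    yield from hanoi(num_disks - 1, spare, target, source)


def is_valid_solution(num_disks, moves):
    """Replay the moves and check that every step is legal and the puzzle ends solved."""
    pegs = {"A": list(range(num_disks, 0, -1)), "B": [], "C": []}
    for disk, src, dst in moves:
        if not pegs[src] or pegs[src][-1] != disk:
            return False  # the disk is not on top of the source peg
        if pegs[dst] and pegs[dst][-1] < disk:
            return False  # cannot place a larger disk on a smaller one
        pegs[dst].append(pegs[src].pop())
    return pegs["C"] == list(range(num_disks, 0, -1))


moves_15 = list(hanoi(15))
print(len(moves_15), is_valid_solution(15, moves_15))
```

The function itself is a few lines long no matter how many disks are involved, which is why grading the program rather than the printed move list separates knowing the algorithm from having room to write it all out.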

The back-and-forth underscores a growing consensus: evaluation design is now as important as model design.

Requiring LRMs to enumerate every step may test their printers more than their planners, while compressed formats, programmatic answers or external scratchpads give a cleaner read on actual reasoning ability.

The episode also highlights practical limits developers face as they ship agentic systems—context windows, output budgets and task formulation can make or break user-visible performance.

For enterprise technical decision makers building applications atop reasoning LLMs, this debate is more than academic. It raises critical questions about where, when, and how to trust these models in production workflows—especially when tasks involve long planning chains or require precise step-by-step output.

If a model appears to “fail” on a complex prompt, the problem may not lie in its reasoning ability, but in how the task is framed, how much output is required, or how much memory the model has access to. This is particularly relevant for industries building tools like copilots, autonomous agents, or decision-support systems, where both interpretability and task complexity can be high.

Understanding the constraints of context windows, token budgets, and the scoring rubrics used in evaluation is essential for reliable system design. Developers may need to consider hybrid solutions that externalize memory, chunk reasoning steps, or use compressed outputs like functions or code instead of full verbal explanations.

Most importantly, the paper’s controversy is a reminder that benchmarking and real-world application are not the same. Enterprise teams should be cautious of over-relying on synthetic benchmarks that don’t reflect practical use cases—or that inadvertently constrain the model’s ability to demonstrate what it knows.

Ultimately, the big takeaway for ML researchers is that before proclaiming an AI milestone—or obituary—make sure the test itself isn’t putting the system in a box too small to think inside.


Just add humans: Oxford medical study underscores the missing link in chatbot testing


Headlines have been blaring it for years: Large language models (LLMs) can not only pass medical licensing exams but also outperform humans. GPT-4 could correctly answer U.S. medical exam licensing questions 90% of the time, even in the prehistoric AI days of 2023. Since then, LLMs have gone on to best the residents taking those exams and licensed physicians.

Move over, Doctor Google, make way for ChatGPT, M.D. But you may want more than a diploma from the LLM you deploy for patients. Like an ace medical student who can rattle off the name of every bone in the hand but faints at the first sight of real blood, an LLM’s mastery of medicine does not always translate directly into the real world.

A paper by researchers at the University of Oxford found that while LLMs could correctly identify relevant conditions 94.9% of the time when directly presented with test scenarios, human participants using LLMs to diagnose the same scenarios identified the correct conditions less than 34.5% of the time.

Perhaps even more notably, patients using LLMs performed even worse than a control group that was merely instructed to diagnose themselves using “any methods they would typically employ at home.” The group left to their own devices was 76% more likely to identify the correct conditions than the group assisted by LLMs.

The Oxford study raises questions about the suitability of LLMs for medical advice and the benchmarks we use to evaluate chatbot deployments for various applications.

Led by Dr. Adam Mahdi, researchers at Oxford recruited 1,298 participants to present themselves as patients to an LLM. They were tasked with both attempting to figure out what ailed them and the appropriate level of care to seek for it, ranging from self-care to calling an ambulance.

Each participant received a detailed scenario, representing conditions from pneumonia to the common cold, along with general life details and medical history. For instance, one scenario describes a 20-year-old engineering student who develops a crippling headache on a night out with friends. It includes important medical details (it’s painful to look down) and red herrings (he’s a regular drinker, shares an apartment with six friends, and just finished some stressful exams).

The study tested three different LLMs. The researchers selected GPT-4o on account of its popularity, Llama 3 for its open weights and Command R+ for its retrieval-augmented generation (RAG) abilities, which allow it to search the open web for help.

Participants were asked to interact with the LLM at least once using the details provided, but could use it as many times as they wanted to arrive at their self-diagnosis and intended action.

Behind the scenes, a team of physicians unanimously decided on the “gold standard” conditions they sought in every scenario, and the corresponding course of action. Our engineering student, for example, is suffering from a subarachnoid haemorrhage, which should entail an immediate visit to the ER.

While you might assume an LLM that can ace a medical exam would be the perfect tool to help ordinary people self-diagnose and figure out what to do, it didn’t work out that way. “Participants using an LLM identified relevant conditions less consistently than those in the control group, identifying at least one relevant condition in at most 34.5% of cases compared to 47.0% for the control,” the study states. They also failed to deduce the correct course of action, selecting it just 44.2% of the time, compared to 56.3% for an LLM acting independently.

Looking back at transcripts, researchers found both that participants provided incomplete information to the LLMs and that the LLMs misinterpreted their prompts. For instance, one user who was supposed to exhibit symptoms of gallstones merely told the LLM: “I get severe stomach pains lasting up to an hour, It can make me vomit and seems to coincide with a takeaway,” omitting the location of the pain, the severity, and the frequency. Command R+ incorrectly suggested that the participant was experiencing indigestion, and the participant incorrectly guessed that condition.

Even when LLMs delivered the correct information, participants didn’t always follow their recommendations. The study found that 65.7% of GPT-4o conversations suggested at least one relevant condition for the scenario, but somehow less than 34.5% of final answers from participants reflected those relevant conditions.

This study is useful, but not surprising, according to Nathalie Volkheimer, a user experience specialist at the Renaissance Computing Institute (RENCI), University of North Carolina at Chapel Hill.

“For those of us old enough to remember the early days of internet search, this is déjà vu,” she says. “As a tool, large language models require prompts to be written with a particular degree of quality, especially when expecting a quality output.”

She points out that someone experiencing blinding pain wouldn’t offer great prompts. Although participants in a lab experiment weren’t experiencing the symptoms directly, they weren’t relaying every detail.

“There is also a reason why clinicians who deal with patients on the front line are trained to ask questions in a certain way and a certain repetitiveness,” Volkheimer goes on. Patients omit information because they don’t know what’s relevant, or at worst, lie because they’re embarrassed or ashamed.

Can chatbots be better designed to address them? “I wouldn’t put the emphasis on the machinery here,” Volkheimer cautions. “I would consider the emphasis should be on the human-technology interaction.” The car, she analogizes, was built to get people from point A to B, but many other factors play a role. “It’s about the driver, the roads, the weather, and the general safety of the route. It isn’t just up to the machine.”

The Oxford study highlights one problem, not with humans or even LLMs, but with the way we sometimes measure them—in a vacuum.

When we say an LLM can pass a medical licensing test, real estate licensing exam, or a state bar exam, we’re probing the depths of its knowledge base using tools designed to evaluate humans. However, these measures tell us very little about how successfully these chatbots will interact with humans.

“The prompts were textbook (as validated by the source and medical community), but life and people are not textbook,” explains Dr. Volkheimer.

Imagine an enterprise about to deploy a support chatbot trained on its internal knowledge base. One seemingly logical way to test that bot might simply be to have it take the same test the company uses for customer support trainees: answering prewritten “customer” support questions and selecting multiple-choice answers. An accuracy of 95% would certainly look pretty promising.

Then comes deployment: Real customers use vague terms, express frustration, or describe problems in unexpected ways. The LLM, benchmarked only on clear-cut questions, gets confused and provides incorrect or unhelpful answers. It hasn’t been trained or evaluated on de-escalating situations or seeking clarification effectively. Angry reviews pile up. The launch is a disaster, despite the LLM sailing through tests that seemed robust for its human counterparts.

This study serves as a critical reminder for AI engineers and orchestration specialists: if an LLM is designed to interact with humans, relying solely on non-interactive benchmarks can create a dangerous false sense of security about its real-world capabilities. If you’re designing an LLM to interact with humans, you need to test it with humans – not tests for humans. But is there a better way?

The Oxford researchers recruited nearly 1,300 people for their study, but most enterprises don’t have a pool of test subjects sitting around waiting to play with a new LLM agent. So why not just substitute AI testers for human testers?

Mahdi and his team tried that, too, with simulated participants. “You are a patient,” they prompted an LLM, separate from the one that would provide the advice. “You have to self-assess your symptoms from the given case vignette and assistance from an AI model. Simplify terminology used in the given paragraph to layman language and keep your questions or statements reasonably short.” The LLM was also instructed not to use medical knowledge or generate new symptoms.
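The simulated-participant setup described above can be sketched as a simple loop between two models. This is a minimal illustration, not the researchers' actual harness: the `Model` type, `simulate_conversation`, and the two stub functions are hypothetical names, and only the instruction text is taken from the quote above. In practice each stub would be replaced by a call to a real LLM.

```python
from typing import Callable, List, Tuple

# Instruction text quoted in the article; everything else in this sketch is
# an illustrative assumption.
PATIENT_PROMPT = (
    "You are a patient. You have to self-assess your symptoms from the given "
    "case vignette and assistance from an AI model. Simplify terminology used "
    "in the given paragraph to layman language and keep your questions or "
    "statements reasonably short."
)

# Hypothetical model interface: takes a prompt string, returns a reply string.
Model = Callable[[str], str]


def simulate_conversation(patient: Model, advisor: Model,
                          vignette: str, turns: int = 3) -> List[Tuple[str, str]]:
    """Alternate patient and advisor messages for a fixed number of turns."""
    transcript: List[Tuple[str, str]] = []
    advisor_reply = ""
    for _ in range(turns):
        # The simulated patient sees its instructions, the case vignette,
        # and the advisor's most recent reply.
        patient_msg = patient(
            f"{PATIENT_PROMPT}\n\nVignette: {vignette}\n"
            f"Last reply from the AI model: {advisor_reply}"
        )
        advisor_reply = advisor(patient_msg)
        transcript.append((patient_msg, advisor_reply))
    return transcript


# Stub models so the sketch runs without any external service.
def stub_patient(prompt: str) -> str:
    return "I get bad stomach pains after eating."


def stub_advisor(message: str) -> str:
    return f"Thanks for sharing. You said: {message}"


transcript = simulate_conversation(stub_patient, stub_advisor,
                                   vignette="(case vignette text)", turns=2)
```

Keeping the patient and advisor behind the same callable interface makes it easy to swap a simulated participant for logged human messages and compare the two, which is the gap the study highlights.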

These simulated participants then chatted with the same LLMs the human participants used. But they performed much better. On average, simulated participants using the same LLM tools nailed the relevant conditions 60.7% of the time, compared to below 34.5% in humans.

In this case, it turns out LLMs play nicer with other LLMs than humans do, which makes them a poor predictor of real-life performance.

Given the scores LLMs could attain on their own, it might be tempting to blame the participants here. After all, in many cases, they received the right diagnoses in their conversations with LLMs, but still failed to correctly guess it. But that would be a foolhardy conclusion for any business, Volkheimer warns.

“In every customer environment, if your customers aren’t doing the thing you want them to, the last thing you do is blame the customer,” says Volkheimer. “The first thing you do is ask why. And not the ‘why’ off the top of your head: but a deep investigative, specific, anthropological, psychological, examined ‘why.’ That’s your starting point.”

You need to understand your audience, their goals, and the customer experience before deploying a chatbot, Volkheimer suggests. All of these will inform the thorough, specialized documentation that will ultimately make an LLM useful. Without carefully curated training materials, “It’s going to spit out some generic answer everyone hates, which is why people hate chatbots,” she says. When that happens, “It’s not because chatbots are terrible or because there’s something technically wrong with them. It’s because the stuff that went in them is bad.”

“The people designing technology, developing the information to go in there and the processes and systems are, well, people,” says Volkheimer. “They also have background, assumptions, flaws and blindspots, as well as strengths. And all those things can get built into any technological solution.”


Top 9 Free AI Tools That Make Your Life Easier

First on the list is copy.ai, an AI-based copywriting tool. Give it a short description of the topic you want to cover and it generates content you can post on your blog or use in a video. Copy.ai can help you write Instagram captions, blog ideas, product descriptions, Facebook content, startup ideas, viral ideas, and more. Create an account on the website, select a tool, fill in the necessary description, and the AI will generate content based on what you asked for.

For tutorials, go to their official YouTube channel. It is an awesome tool that is going to be really handy in the future.

Hotpot.ai offers a collection of AI tools for designers, as well as for anyone else. Its “AI picture restorer” removes scratches and restores old photos so they look brand new.

The AI picture colorizer turns your black-and-white photos into color. There is also a background remover, a picture enlarger, and a lot more for designers. Check it out and explore all the tools.

Deep Nostalgia became very popular on the internet when people started making reaction videos of their parents reacting to animated pictures of their grandparents. Deep Nostalgia is a very cool app that will animate any photo of a person.

What makes it really cool is the fact that you can upload an old photo of your family and see them animated and living, which is pretty cool and creepy at the same time if they have already died. It is a really amazing service from MyHeritage. I created a lot of cool animations with my old photos as well as with photos of my grandparents.

A distinct and catchy profile picture can make all the difference. That's where pfpmaker comes in. It is a free online tool for creating professional profile pictures that fit you. It generates a lot of profile pictures, and you can also make small changes to the generated pictures if you want to.

thing ever, so brandmark.io makes it super easy. It will create a logo for your brand within 2 clicks. Go to the website, type in your brand name and slogan (if you have one), give brand keywords that relate to your brand, then pick a color style and you are done. The AI will

You can also make minor edits to the suggested logos to better fit your needs. Getting the PNG file requires paying a hefty price, but if you are just looking for logo ideas, this is a great place to start.

Bigjpg does the same as deep-image.ai, but this service offers a few more options: if your photo is an artwork, it scales the image differently than normal photos, it supports up to 4x enlargement for free, and you can also set noise reduction options. Very good tool,

namelix a try. It's an AI-based name generator that will suggest good names for your brand depending on the keywords that you give. It can also suggest a logo for your brand. It is a pretty cool and amazing tool. So that's it: those are my favourite free AI-based tools that you can use right now,

Which one do you like the most? Let me know in the comments below.


7 Free Websites Every Content Creator Needs to Know

There’s no excuse not to try this website — it’s free and easy to use!

Visit Exploding Topics From Here

This is a valuable tool when creating new blog posts because it generates catchy headlines for your blog post to catch a reader’s attention.

Visit Headline Studio From Here

Visit Answer The Public From Here

Surfer SEO is free and the interface is very friendly. It's a great tool for anyone who wants to do quick competitor research or check their site's rankings at any time.

Canva offers thousands of free, professionally designed templates that can be customized with just a few clicks. Simply upload your photos to Canva, drag them into the template of your choice, and save the file to your computer.

It is free to use for basic use but if you want access to different fonts or more features, then you need to buy a premium plan.

There is another section of Facebook Audience Insights that is very helpful. It shows you the interests, hobbies, and activities that people in your target market care about most. You can use this information to create content about things they will care about, as opposed to topics they may not be so keen on.

Visit Facebook Audience Insights From Here

The only cons are that some photos contain people, and Pexels doesn't allow you to remove people from photos. Search your keyword and download as many as you want!

So there you have it. We hope that these specially curated websites will come in handy for content creators and small businesses alike. If you've got a site that should be on this list, let us know! And if you're looking for more content creator resources, let us know in the comments section below.

10 Best Chrome Extensions That Are Perfect for Everyone

Are you a heavy Chrome user? That’s nice to hear. But first, consider whether there are any essential Chrome extensions missing from your browsing life. Here we share the 10 best Chrome extensions that are perfect for everyone. Let's start.

When you have too many passwords to remember, LastPass remembers them for you.

This Chrome extension is an easy way to save time and increase security. It’s a single password manager that will log you into all of your accounts. You only need to remember one thing: your LastPass password.

MozBar is an SEO toolbar extension that makes it easy for you to analyze your web pages' SEO while you surf. You can customize your search so that you see data for a particular region or for all regions. You get data such as website and domain authority and link profile. The status column tells you whether there are any no-followed links to the page. You can also compare link metrics. There is a pro version of MozBar, too.

Grammarly is a real-time grammar checking and spelling tool for online writing. It checks spelling, grammar, and punctuation as you type, and has a dictionary feature that suggests related words. If you write on a mobile phone, Grammarly also has a mobile keyboard app.

VidIQ is a SaaS product and Chrome Extension that makes it easier to manage and optimize your YouTube channels. It keeps you informed about your channel's performance with real-time analytics and powerful insights.

ColorZilla is a browser extension that allows you to find out the exact color of any object in your web browser. This is especially useful when you want to match elements on your page to the color of an image.

Honey is a Chrome extension that lets you save products from a website and notifies you when they are available at a lower price. It is one of the top Chrome extensions for finding coupon codes whenever you shop online.

GMass (or Gmail Mass) lets users compose and send mass emails using Gmail. It is a great tool because you can use it as a replacement for a third-party email sending platform. You will love GMass for boosting your emailing functionality on the platform.

It's a Chrome extension for geeks that enables you to highlight and save what you see on the web.

It was designed by Notion, a Google Workspace alternative that helps teams craft better ideas and collaborate effectively.

If you are someone who works online, you need to surf the internet to get your business done. And often there is no time to read or analyze something. But it's important that you do it. Notion Web Clipper will help you with that.

WhatFont is a Chrome extension that allows web designers to easily identify and compare different fonts on a page. The first time you use it on any page, WhatFont will copy the selected page. It uses this page to find out what fonts are present and generates an image that shows all those fonts in different sizes. Besides obvious websites like Google or Amazon, you can also use it on sites where embedded fonts are used.

Similar Web is an SEO add-on for both Chrome and Firefox. It allows you to check website traffic and key metrics for any website, including engagement rate, traffic ranking, keyword ranking, and traffic source. This is a good tool if you are looking to find new and effective SEO strategies as well as analyze trends across the web.

Most people know how to install an extension on a PC, but many don't know how to install one on an Android phone, so here is how to do it on Android.

1. Download Kiwi browser from Play Store and then Open it.

2. Tap the three dots at the top right corner and select Extension.

3. Tap (+From Store) to access the Chrome Web Store, or simply search for the Chrome Web Store and open it.

4. Once you have found an extension, tap Add to Chrome. A message will pop up asking you to confirm your choice. Hit OK to install the extension in the Kiwi browser.

5. To manage extensions on the browser, tap the three dots in the upper right corner. Then select Extensions to access a catalog of installed extensions that you can disable, update or remove with just a few clicks.

Your Chrome extensions should install on Android, but there’s no guarantee all of them will work, because Google Chrome extensions are not optimized for Android devices.

We hope this list of the 10 best Chrome extensions will help you pick the right ones. We selected the extensions after matching their features to the needs of different categories of people. Let me know in the comment section which extension you like the most.

Most Frequently Asked Questions About NFTs (Non-Fungible Tokens)

Non-fungible tokens (NFTs) are among the most popular digital assets today, capturing the attention of cryptocurrency investors, whales and people from around the world. People find it amazing that some users spend thousands or millions of dollars on a single NFT-based image of a monkey or other token when anyone can simply take a screenshot for free. So here we share some frequently asked questions about NFTs.

NFT stands for non-fungible token, which is a cryptographic token on a blockchain with unique identification codes that distinguish it from other tokens. NFTs are unique and not interchangeable, which means no two NFTs are the same. NFTs can be unique artworks, GIFs, images, videos, audio albums, in-game items, collectibles, etc.

A blockchain is a distributed digital ledger that allows for the secure storage of data. By recording any kind of information—such as bank account transactions, the ownership of Non-Fungible Tokens (NFTs), or Decentralized Finance (DeFi) smart contracts—in one place, and distributing it to many different computers, blockchains ensure that data can’t be manipulated without everyone in the system being aware.
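To make the tamper-evidence idea above concrete, here is a minimal sketch of a hash-linked ledger. It is a simplification under stated assumptions: the names `block_hash`, `build_chain`, and `is_valid` are hypothetical, the example records are invented, and a real blockchain adds distribution across many computers and a consensus mechanism, none of which is modeled here.

```python
import hashlib
import json
from typing import Dict, List

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, data: str, prev_hash: str) -> str:
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records: List[str]) -> List[Dict]:
    """Link each record to the one before it through its hash."""
    chain: List[Dict] = []
    prev = GENESIS_PREV
    for index, data in enumerate(records):
        current = block_hash(index, data, prev)
        chain.append({"index": index, "data": data, "prev": prev, "hash": current})
        prev = current
    return chain


def is_valid(chain: List[Dict]) -> bool:
    """Recompute every hash; any edited block breaks the links after it."""
    prev = GENESIS_PREV
    for block in chain:
        if block["prev"] != prev:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev"]):
            return False
        prev = block["hash"]
    return True


# Invented example records.
chain = build_chain(["alice owns token 1", "alice sells token 1 to bob"])
valid_before = is_valid(chain)

# Tampering with an earlier record is detected on re-validation.
chain[0]["data"] = "mallory owns token 1"
valid_after = is_valid(chain)
```

Because each block's hash covers the previous block's hash, changing one old record means every later hash no longer matches, which is why anyone holding a copy of the ledger can notice the manipulation.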

The value of an NFT comes from its ability to be traded freely and securely on the blockchain, which is not possible with other current digital ownership solutions. The NFT points to its location on the blockchain, but doesn’t necessarily contain the digital property. Fungible items are interchangeable: for example, if you replace one bitcoin with another, you will still have the same thing. If you buy a non-fungible item, such as a movie ticket, it is impossible to replace it with any other movie ticket because each ticket is unique to a specific time and place.

One of the unique characteristics of non-fungible tokens (NFTs) is that they can be tokenised to create a digital certificate of ownership that can be bought, sold and traded on the blockchain.

As with crypto-currency, records of who owns what are stored on a ledger that is maintained by thousands of computers around the world. These records can’t be forged because the whole system operates on an open-source network.

NFTs also contain smart contracts—small computer programs that run on the blockchain—that give the artist, for example, a cut of any future sale of the token.
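The royalty arithmetic behind such a contract can be sketched in a few lines. This is only an off-chain illustration: real royalty logic runs in a contract on the blockchain, and the function name `split_sale`, the 10% rate, and the sale price below are all hypothetical.

```python
from decimal import Decimal
from typing import Tuple


def split_sale(price: Decimal, royalty_rate: Decimal) -> Tuple[Decimal, Decimal]:
    """Return (artist royalty, seller proceeds) for one resale."""
    royalty = (price * royalty_rate).quantize(Decimal("0.01"))
    return royalty, price - royalty


# Hypothetical resale: a token resold for 1000 with a 10% artist royalty.
artist_cut, seller_proceeds = split_sale(Decimal("1000"), Decimal("0.10"))
```

Using `Decimal` rather than floats keeps the split exact, which matters when the two parts must always add back up to the sale price.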

Non-fungible tokens (NFTs) aren't cryptocurrencies, but they do use blockchain technology. Many NFTs are based on Ethereum, where the blockchain serves as a ledger for all the transactions related to said NFT and the properties it represents.

5) How to make an NFT?

Anyone can create an NFT. All you need is a digital wallet, some Ethereum tokens and a connection to an NFT marketplace where you’ll be able to upload and sell your creations.

When you purchase an NFT, that purchase is recorded on the blockchain, a ledger of transactions, and that entry acts as your proof of ownership.

The value of an NFT varies a lot based on the digital asset up for grabs. People use NFTs to trade and sell digital art, so when creating an NFT, you should consider the popularity of your digital artwork along with historical statistics.

In the year 2021, a digital artist called Pak created an artwork called The Merge. It was sold on the Nifty Gateway NFT market for $91.8 million.

Non-fungible tokens can be used in investment opportunities. One can purchase an NFT and resell it at a profit. Certain NFT marketplaces let sellers of NFTs keep a percentage of the profits from sales of the assets they create.

Many people want to buy NFTs because it lets them support the arts and own something cool from their favorite musicians, brands, and celebrities. NFTs also give artists an opportunity to program in continual royalties if someone buys their work. Galleries see this as a way to reach new buyers interested in art.

There are many places to buy digital assets, such as OpenSea, and their policies vary. On Top Shot, for instance, you sign up for a waitlist that can be thousands of people long. When a digital asset goes on sale, you are occasionally chosen to purchase it.

To mint an NFT, you must pay a gas fee to process the transaction on the Ethereum blockchain, but you can mint your NFT on a different blockchain called Polygon to avoid paying gas fees. This option is available on OpenSea, and it simply means that your NFT can only be traded on Polygon's blockchain and not on Ethereum's blockchain. Mintable allows you to mint NFTs for free without paying any gas fees.

The answer is no. Non-Fungible Tokens are minted on the blockchain using cryptocurrencies such as Ethereum, Solana, Polygon, and so on. Once a Non-Fungible Token is minted, the transaction is recorded on the blockchain and the contract or license is awarded to whoever has that Non-Fungible Token in their wallet.

You can sell your work and creations by attaching a license to it on the blockchain, where its ownership can be transferred. This lets you get exposure without losing full ownership of your work. Some of the most successful projects include Cryptopunks, Bored Ape Yacht Club NFTs, SandBox, World of Women and so on. These NFT projects have gained popularity globally and are owned by celebrities and other successful entrepreneurs. Owning one of these NFTs can give you a ticket to exclusive business meetings and life-changing connections.

That’s a wrap. I hope you found this article enlightening. I have just answered some questions with my limited knowledge about NFTs. If you have any questions or suggestions, feel free to drop them in the comment section below. Also, I have a question for you: is bitcoin an NFT? Let me know in the comment section below.
